Azure Data Lake Storage Generation 2 (ADLS Gen 2) has been generally available since 7 Feb 2019. Azure Databricks is a first-party offering for Apache Spark. Many customers want to set ACLs on ADLS Gen 2 and then access those files from Azure Databricks, while ensuring that only the precise, minimal permissions are granted. In the process, we have seen some interesting patterns and errors (such as the infamous 403 / “request not authorized” error). While there is documentation on the topic, I wanted to do an end-to-end walkthrough of the steps, and hopefully with this you can get everything working just perfectly! Let’s get started…

Setting up a Service Principal in Azure AD

To do this, we first need to create an application registration in Azure Active Directory (AAD). This is well documented in many places, but for reference, here is what I did.

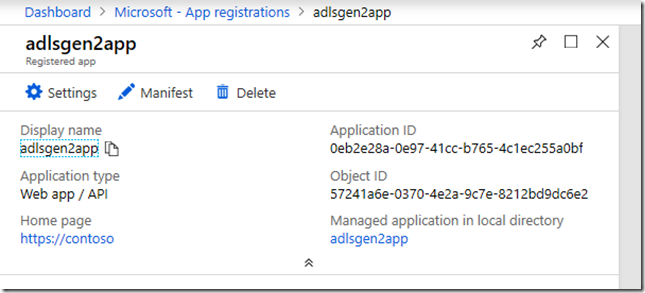

I created a new app registration from the Portal and called it “adlsgen2app”:

In the next screenshot below, note the Application’s ID: 0eb2e28a-0e97-41cc-b765-4c1ec255a0bf. This is also sometimes referred to as the “Client ID”. We are going to ignore the Object ID of the application because, as you will see, we will later need the Object ID of the Service Principal for that application within our AAD tenant. More on that soon.

We then proceed to create a secret key (“keyfordatabricks”) for this application (redacted in the screenshot below for privacy reasons):

Note down that key in a safe place so that we can later store it in AKV and then eventually reference that AKV-backed secret from Databricks.

Setting up an AKV-backed secret store for Azure Databricks

In order to reference the above secret stored in Azure Key Vault (AKV), from within Azure Databricks, we must first add the secret manually to AKV and then associate the AKV itself with the Databricks workspace. The instructions to do this are well documented at the official page. For reference, here is what I did.

As mentioned, I first copied the Service Principal secret into AKV (I had created an AKV instance called “mydbxakv”):

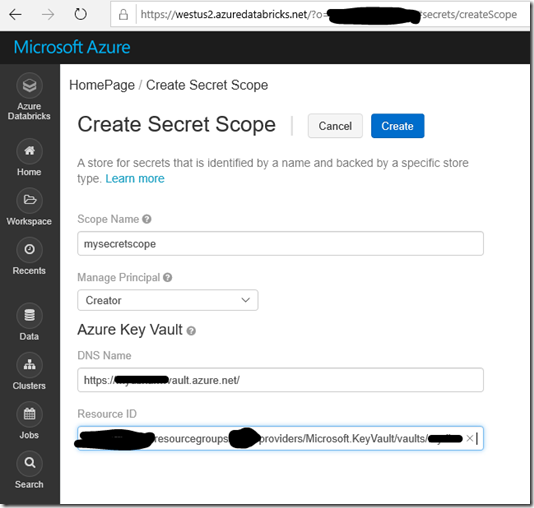

Then, I followed the steps in the Azure Databricks documentation to create an AKV-backed secret scope within Databricks, and reference the AKV from there:

Granting the Service Principal permissions in ADLS Gen 2

This is probably the most important piece, and we have had some confusion here on how exactly to set the permissions / Access Control Lists in ADLS Gen 2. To start with, we use Azure Storage Explorer to set / view these ACLs. Here’s a screenshot of the ADLS Gen 2 account that I am using. Under that account, there is a “container” (technically a “file system”) called “acltest”:

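Before we can grant permissions at ADLS Gen 2 to the Service Principal, we need to identify its Object ID (OID). To do this, I used Azure CLI to run the sample command below. The GUID passed to --id is the Application ID which we noted a few steps ago. (On newer Azure CLI versions, which are based on Microsoft Graph, the property is named id rather than objectId, so the query may need to be --query id.)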

az ad sp show --id 0eb2e28a-0e97-41cc-b765-4c1ec255a0bf --query objectId

The value that is returned by the above command is the Object ID (OID) for the Service Principal:

79a448a0-11f6-415d-a451-c89f15f438f2

The OID for the Service Principal has to be used to define permissions in the ADLS Gen 2 ACLs. I repeat: do not use the Object ID from the Application; you must use the Object ID from the Service Principal in order to set ACLs / permissions at ADLS Gen 2 level.
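If you script this step rather than copying the value by hand, a small helper along these lines could pull the Service Principal's Object ID out of the JSON that `az ad sp show` prints. This is only a sketch: the function name is hypothetical, the sample JSON is trimmed to two fields, and the fallback to an `id` property assumes the newer, Microsoft Graph-based CLI output.

```python
import json


def service_principal_object_id(cli_json: str) -> str:
    """Return the Service Principal's Object ID from `az ad sp show` JSON output."""
    sp = json.loads(cli_json)
    # Older CLI versions expose "objectId"; newer Graph-based versions are
    # assumed to expose the same value as "id".
    oid = sp.get("objectId") or sp.get("id")
    if not oid:
        raise ValueError("no object ID found in the CLI output")
    return oid


# Trimmed, illustrative output using the sample GUIDs from this walkthrough.
sample = json.dumps({
    "appId": "0eb2e28a-0e97-41cc-b765-4c1ec255a0bf",
    "objectId": "79a448a0-11f6-415d-a451-c89f15f438f2",
})
print(service_principal_object_id(sample))
```

Note that `appId` is deliberately ignored here: it is the Application (client) ID, not the value that goes into the ACL.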

Set / Manage the ACLs at ADLS Gen 2 level

Let’s see this in action; in the above “acltest” container, I am going to add permission for this service principal on a specific file that I have to later access from Azure Databricks:

Sidebar: if we view the default permissions on this file, we see that only $superuser has access. $superuser represents access to the ADLS Gen 2 file system via the storage key, and is only seen when these containers / file systems were created using Storage Key authentication.

To view / manage ACLs, you right-click on the container / folder / file in Azure Storage Explorer, and then use the “Manage Access” menu. In the Manage Access dialog, as shown in the screenshot below, I have copied (but not yet added) the OID for the service principal that we obtained previously. Again – I cannot emphasize this enough – please make sure you use the Object ID for the Service Principal and not the Object ID for the app registration.

Next I clicked the Add button and also set the level of access to web_site_1.dat (Read and Execute in this case, as I intend to only read this data into Databricks):

Then I clicked Save. You can also use “Default permissions” if you set ACLs on top-level folders, but do remember that those default permissions only apply to newly created children.

Permissions on existing files

Currently, for existing files / folders, you have to grant the desired permissions explicitly. Another very important point is that the Service Principal OID must also have been granted Read and Execute at the root (the container level), as well as on any intermediate folder(s). In my case, the file web_site_1.dat is located under /mydata. So note that I also have to add the permissions at root level:

Then at /mydata level:

In other words, the whole chain: all the folders in the path leading up to and including the (existing) file being accessed, must have permissions granted for the Service Principal.
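To make the "whole chain" idea concrete, here is a minimal sketch that lists every path needing an entry for a given file, together with an ACL entry string in the short `user:<object ID>:<permissions>` form. The helper names are hypothetical and nothing here calls Azure; it only enumerates what would have to be set (for example, by hand in Azure Storage Explorer). The `r-x` default mirrors the Read and Execute grant used in this walkthrough.

```python
from posixpath import join


def acl_targets(file_path: str) -> list:
    """List every path that needs an ACL entry: root, each folder, then the file."""
    parts = [p for p in file_path.split("/") if p]
    targets = ["/"]
    current = "/"
    for part in parts:
        current = join(current, part)
        targets.append(current)
    return targets


def acl_entry(object_id: str, permissions: str = "r-x") -> str:
    """Format a short-form ACL entry for a Service Principal Object ID."""
    return f"user:{object_id}:{permissions}"


# Sample Service Principal OID from this walkthrough.
sp_oid = "79a448a0-11f6-415d-a451-c89f15f438f2"
for target in acl_targets("/mydata/web_site_1.dat"):
    print(target, acl_entry(sp_oid))
```

For the file in this walkthrough, the targets are the root, /mydata, and the file itself, which matches the three places where permissions were granted above.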

Using the Service Principal from Azure Databricks

Firstly, review the requirements from the official docs. We do recommend using Databricks runtime 5.2 or above.

Providing the ADLS Gen 2 credentials to Spark

In the walkthrough below, we choose to do this at session level. In the sample code below, note the usage of the Databricks dbutils.secrets calls to obtain the secret for the app from AKV, and also note the usage of the Application ID itself as the “client ID”:

spark.conf.set("fs.azure.account.auth.type", "OAuth")

spark.conf.set("fs.azure.account.oauth.provider.type", "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider")

spark.conf.set("fs.azure.account.oauth2.client.id", "0eb2e28a-0e97-41cc-b765-4c1ec255a0bf") # This GUID is just a sample for this walkthrough; it needs to be replaced with the actual Application ID in your case

spark.conf.set("fs.azure.account.oauth2.client.secret", dbutils.secrets.get(scope = "mysecretscope", key = "adlsgen2secret"))

spark.conf.set("fs.azure.account.oauth2.client.endpoint", "https://login.microsoftonline.com/<<AAD tenant id>>/oauth2/token")

Note that the <<AAD tenant id>> placeholder above has also got to be substituted with the actual GUID for the AAD tenant. You can get that from the Azure Portal blade for Azure Active Directory.
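A forgotten placeholder in the endpoint URL is an easy mistake to make, so one option is to build the URL through a small helper that refuses anything that is not a well-formed GUID. This is a sketch only: the function name is hypothetical, and the tenant ID used below is a made-up value for illustration.

```python
import uuid


def oauth_token_endpoint(tenant_id: str) -> str:
    """Build the AAD token endpoint URL for a tenant, validating the GUID first."""
    # uuid.UUID raises ValueError when the value is not a well-formed GUID,
    # which catches a placeholder such as "<<AAD tenant id>>" left in by mistake.
    tenant = str(uuid.UUID(tenant_id))
    return f"https://login.microsoftonline.com/{tenant}/oauth2/token"


# Made-up tenant ID, for illustration only.
print(oauth_token_endpoint("11111111-2222-3333-4444-555555555555"))
```

The returned string can then be passed as the value of the fs.azure.account.oauth2.client.endpoint setting.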

Alternate way of configuring the ADLS Gen 2 credentials

In the previous code snippet, the service principal credentials are set up in such a way that they become the default for any ADLS Gen 2 account being accessed from that Spark session. However, there is another (potentially more precise) way of specifying these credentials, and that is to suffix the Spark configuration item keys with the ADLS Gen 2 account name. For example, imagine that “myadlsgen2” is the name of the ADLS Gen 2 account that we are using. The suffix to be applied in this case would be myadlsgen2.dfs.core.windows.net. Then the Spark conf settings would look like the below:

spark.conf.set("fs.azure.account.auth.type.myadlsgen2.dfs.core.windows.net", "OAuth")

spark.conf.set("fs.azure.account.oauth.provider.type.myadlsgen2.dfs.core.windows.net", "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider")

spark.conf.set("fs.azure.account.oauth2.client.id.myadlsgen2.dfs.core.windows.net", "<<application ID GUID>>")

spark.conf.set("fs.azure.account.oauth2.client.secret.myadlsgen2.dfs.core.windows.net", dbutils.secrets.get(scope = "mysecretscope", key = "adlsgen2secret"))

spark.conf.set("fs.azure.account.oauth2.client.endpoint.myadlsgen2.dfs.core.windows.net", "https://login.microsoftonline.com/<<AAD tenant id>>/oauth2/token")

This method of suffixing the account name was enabled by this Hadoop fix and also referenced here.
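Since the five account-scoped keys differ only by the suffix, they can be generated rather than typed out. The sketch below builds them as a plain dictionary; the function name is hypothetical, the placeholder arguments need to be replaced with real values, and the part that actually applies the settings is left as a comment because it relies on the `spark` object that a Databricks notebook provides.

```python
def adls_oauth_conf(account_name, client_id, client_secret, tenant_id):
    """Return account-scoped Spark conf settings for OAuth access to ADLS Gen 2."""
    suffix = f"{account_name}.dfs.core.windows.net"
    return {
        f"fs.azure.account.auth.type.{suffix}": "OAuth",
        f"fs.azure.account.oauth.provider.type.{suffix}":
            "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider",
        f"fs.azure.account.oauth2.client.id.{suffix}": client_id,
        f"fs.azure.account.oauth2.client.secret.{suffix}": client_secret,
        f"fs.azure.account.oauth2.client.endpoint.{suffix}":
            f"https://login.microsoftonline.com/{tenant_id}/oauth2/token",
    }


# Placeholder values; in a notebook the secret would come from dbutils.secrets.get.
conf = adls_oauth_conf("myadlsgen2", "<<application ID GUID>>",
                       "<<secret>>", "<<AAD tenant id>>")
for key in conf:
    print(key)  # print only the keys, never the secret value

# In a Databricks notebook, where `spark` is predefined, apply them with:
# for key, value in conf.items():
#     spark.conf.set(key, value)
```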

“Happy Path” testing

If all the steps were done correctly, then when you run code to read the file:

df = spark.read.csv("abfss://acltest@<<storage account name>>.dfs.core.windows.net/mydata/web_site_1.dat")

df.show() # show() returns None, so call it separately to keep df as the DataFrame

…it works correctly!
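The abfss:// path above follows a fixed pattern: container (file system) name, then the storage account's dfs endpoint, then the path inside the container. A tiny helper like the following, whose name is hypothetical, can reduce typos when assembling it; “myadlsgen2” is the example account name used earlier in this post.

```python
def abfss_uri(container: str, account_name: str, path: str) -> str:
    """Build an abfss:// URI for a path inside an ADLS Gen 2 container."""
    return f"abfss://{container}@{account_name}.dfs.core.windows.net/{path.lstrip('/')}"


uri = abfss_uri("acltest", "myadlsgen2", "/mydata/web_site_1.dat")
print(uri)

# In a Databricks notebook, where `spark` is predefined:
# df = spark.read.csv(uri)
```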

But, what if you missed a step?

If you have missed any step in granting permissions at the various levels in the folder hierarchy, it will fail with a 403 error like the below:

StatusCode=403 StatusDescription=This request is not authorized to perform this operation using this permission.

The same error, from a Scala cell:

If you happen to run into the above errors, double-check all your steps. Most likely you missed a folder or root-level permission (assuming you gave the permission to the file correctly). The other cause that I have seen is that the permissions were set in Azure Storage Explorer using the Object ID of the application, and not the Object ID of the service principal. This is clearly documented here.

Another reason for the 403 “not authorized” error

Until recently, this error would also occur even if the ACLs were granted perfectly, due to an issue with the ABFS driver. Due to that issue, customers had to add the Service Principal to the storage account contributor IAM permission on the ADLS Gen 2 account. Thankfully, this issue was fixed in HADOOP-15969 and the fix is now included in the Databricks runtime 5.x. You no longer need to grant the Service Principal any IAM permissions on the ADLS Gen 2 account – if you get the ACLs right!

Good post.

Does setting the adls credentials in spark.conf guarantee that it’s only effective for the current user’s session and other users cannot use it to access ADLS gen2?

I tested in Databricks and it seems to behave that way, but I need to understand the mechanism behind it.

Hi James, yes, your understanding is correct. Setting Spark conf items like this is session-specific. However, with Azure Databricks specifically, one consideration to keep in mind is that the secret scope itself is workspace-scoped, but other users need explicit permissions on the secret scope. In addition, other users may not have access to your notebook by default. So all in all, I believe this method is quite effective in isolating access to the data, compared to (let’s say) mount points, which are not locked down by ACLs.

Great article, you saved the day!

is using Service Principals preferred to using managed identity in these scenarios?

Interesting question – currently, it is not possible to use MSIs or user-assigned identity with Azure Databricks clusters. Hence using SPNs is the only way to go.

Sorry, just saw this now. As Azure Databricks currently does not support using a managed identity for its clusters, Service Principals are one option – the other option is to use the logged-in user’s identity using the OAuth passthrough capability in Databricks.

hello. you said for existing files / folders, you have to grant desired permissions explicitly. what about new files that come into the datalake? Right now when trying to run queries on them they fail due to file access issues. What do I need to do to enable SP access to any file in that directory?

Thanks

I tried option 4, directly using the storage account keys of ADLS Gen2. It doesn’t seem to work. Is using a Service Principal the only workable solution?

Option 4 in this https://docs.microsoft.com/en-us/azure/databricks/data/data-sources/azure/azure-datalake-gen2

Thanks for this post Arvind. It just resolved my Databricks to ADLS mount permission issues.

I Love you… saved my day! ❤
