Welcome back! As promised last time, here’s part 3 of the series on the ‘Instant File Initialization’ (a.k.a. ‘IFI’) optimization for SQL Server. If you missed the first two parts, please take some time to read them before continuing, because this post assumes much of what they cover:

- SQL Server and ‘Instant File Initialization’ Under the Hood – Part 1 (Windows fundamentals to understand IFI)

- SQL Server and ‘Instant File Initialization’ Under the Hood – Part 2 (How SQL uses IFI to speed up DB file operations)

With that background, this post will show you how IFI works (or rather, did not work in one specific release!) in conjunction with the Buffer Pool Extension feature in SQL 2014 and above.

Buffer Pool Extension Overview

A few weeks ago, one of my colleagues asked this question internally: ‘does IFI (Instant File Initialization) have impact on creating the BPE?’. That question was the inspiration for this entire series of posts, so I thank him for that. In order to answer the question we first need to understand conceptually how the Buffer Pool Extension (BPE) feature works. The Books Online topic for BPE is a good starting point, but here is my summary:

- Think of the BPE as offering a ‘Level 2’ (L2) cache over the primary ‘Level 1’ (L1) cache, i.e. the classic Buffer Pool. Only if the DB page cannot be found in either the L1 cache or the L2 cache will the request fall through to a regular physical read from the data file.

- The BPE cache mechanism is based on a file which is preferably hosted on very fast storage, such as an SSD. For example, in an Azure D-Series VM context, the D: drive is an excellent location for the BPE and / or TEMPDB; see this article from the SQL Server Product Team for some details.

- The BPE file is created when you run the ALTER SERVER CONFIGURATION command to enable BPE, or on SQL startup (if BPE was already configured).

- The size of the BPE file can be up to a multiple of the ‘max server memory’ configured (the limit varies by SQL Edition), but we do not generally recommend more than 4x the max server memory setting. I mention this here because the BPE file may be very large and, depending on which buffer page is being saved into it, the offset of that file write operation may be quite large.

- Finally, the BPE file is deleted on SQL Server shutdown (and hence re-created on startup.)
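
The lookup order described in the list above can be sketched as a toy model. This is only an illustration: the function name `read_page` and the three dictionaries are hypothetical stand-ins, and the sketch ignores everything a real engine has to deal with (eviction, latching, asynchronous I/O and so on).

```python
def read_page(page_id, buffer_pool, bpe, data_file):
    """Toy two-level lookup: buffer pool (L1), then BPE (L2), then data file.

    All three containers are plain dicts keyed by page id; they are
    hypothetical stand-ins, not SQL Server structures.
    Returns a (page, source) tuple.
    """
    if page_id in buffer_pool:
        return buffer_pool[page_id], "buffer pool"
    if page_id in bpe:
        page = bpe[page_id]
        buffer_pool[page_id] = page  # bring the page back into L1
        return page, "BPE"
    page = data_file[page_id]        # last resort: physical read
    buffer_pool[page_id] = page
    return page, "data file"


buffer_pool = {1: b"page-1"}
bpe = {2: b"page-2"}
data_file = {1: b"page-1", 2: b"page-2", 3: b"page-3"}

for pid in (1, 2, 3):
    print(pid, read_page(pid, buffer_pool, bpe, data_file)[1])
```

Only the third lookup in this example reaches the data file; the second one is served from the BPE, which is the case the feature exists to make cheap.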

BPE Internals

As with other operations in SQL, the writes into the BPE are optimized using the WriteFileGather() API. Depending on which buffer page is being written, the offset into the BPE file can be quite large. If we run a Process Monitor trace during the BPE file operations, we will notice that in SQL 2014 RTM there are a number of Synchronous Paging I/O operations (the second highlighted line in the screenshot below) following a regular write operation to the BPE (the first call to WriteFile in the screenshot, at an offset of 196771840):

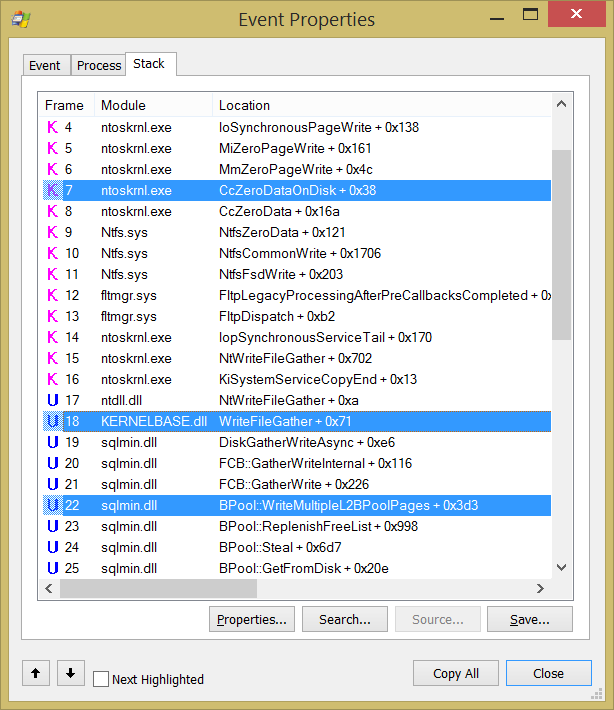

But as you learnt in the first two parts, writing into ‘random’ locations inside a file will cause the OS to silently ‘zero out’ the allocations from the previous valid data length to the new location, and indeed in the case of BPE writes as well, you will see the tell-tale signs of this:

Notice the calls to CcZeroDataOnDisk above, which represents the zero-stamping at a Windows level. Now this is synchronous and will cause the top-level WriteFileGather() to block till the allocations are zeroed up to the current data length. What this means is that the SQL task which caused the buffer fetch in the first place will be blocked a bit more than you would like.
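
To get a feel for how much zeroing a single write can trigger, here is a minimal back-of-the-envelope sketch. It assumes the worst case, where the file's valid data length is still 0 when the write at offset 196771840 arrives, and it assumes the BPE file is laid out as consecutive 8 KB page slots. Both are simplifications for illustration, and `zero_fill_bytes` is a hypothetical helper, not a Windows or SQL Server API.

```python
PAGE_SIZE = 8192  # SQL Server database pages are 8 KB


def zero_fill_bytes(valid_data_length: int, write_offset: int) -> int:
    """Bytes that must be zeroed before a write at write_offset can complete.

    Models the rule from the earlier parts of this series: the gap between
    the current valid data length and the write offset gets zero-stamped.
    """
    return max(0, write_offset - valid_data_length)


offset = 196771840  # the WriteFile offset mentioned earlier in this post

# Which 8 KB slot does that offset correspond to (assumed layout)?
slot, remainder = divmod(offset, PAGE_SIZE)
print(slot, remainder)             # 24020 0

# Worst case: nothing has been written yet, so the whole gap is zeroed.
print(zero_fill_bytes(0, offset))  # 196771840
```

In other words, under these assumptions a single 8 KB page write could force roughly 188 MB of zeroing before it completes.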

Salvation!

From the above, it certainly looks like there is room for improvement in SQL 2014 RTM, because the writes into the BPE are effectively synchronous and slow down operations. Thankfully, our development team acted on feedback from customers and introduced a call to SetFileValidData() in SQL 2014 Service Pack 1. Specifically, the issue was fixed by calling the SetFileValidData() API in the BPE initialization code!

So if you now capture a Process Monitor trace during BPE initialization in SQL 2014 SP1, here is what you will see:

Since the valid data length is being set proactively to the entire file size itself, initial writes to the BPE file at any random (high) offsets are no longer blocking due to underlying zeroing. This leads to a significant improvement for some customers.
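
The difference can be illustrated with a small simulation. This is a toy model under stated assumptions, not a measurement: `total_zeroed` is a hypothetical function that tracks a valid data length in plain Python, the file size and write pattern are made up, and `preset_valid_data=True` merely mimics the effect of setting the valid data length to the full file size up front.

```python
import random

PAGE_SIZE = 8192  # SQL Server database pages are 8 KB


def total_zeroed(file_size, write_offsets, preset_valid_data):
    """Sum the bytes that would be zero-stamped across a series of page writes.

    preset_valid_data=True models a file whose valid data length already
    equals the file size before the first write.
    """
    valid_data_length = file_size if preset_valid_data else 0
    zeroed = 0
    for offset in write_offsets:
        if offset > valid_data_length:
            zeroed += offset - valid_data_length  # gap is zeroed first
        valid_data_length = max(valid_data_length, offset + PAGE_SIZE)
    return zeroed


rng = random.Random(42)
file_size = 1024 * PAGE_SIZE  # a hypothetical 8 MB file
offsets = [rng.randrange(1024) * PAGE_SIZE for _ in range(100)]

before = total_zeroed(file_size, offsets, preset_valid_data=False)
after = total_zeroed(file_size, offsets, preset_valid_data=True)
print(before, after)
```

With the valid data length preset, no write in this model ever lands beyond it, so the zeroing cost drops to 0 regardless of the order in which pages are written.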

Edit 28 May 2015: Now that SQL 2016 CTP2 is officially available, I’m glad to report that the above improvement is also present in SQL 2016 code base!

So with that, you now know one more way in which the IFI optimization is being used within SQL Server engine. There is one more place which we can talk about, but I’d like to challenge our readers to share their guesses on what that might be – please post your guesses as comments, and I will come back to you shortly with that information as well!